THE Benchmark: Transferable Representation Learning for Monocular Height Estimation

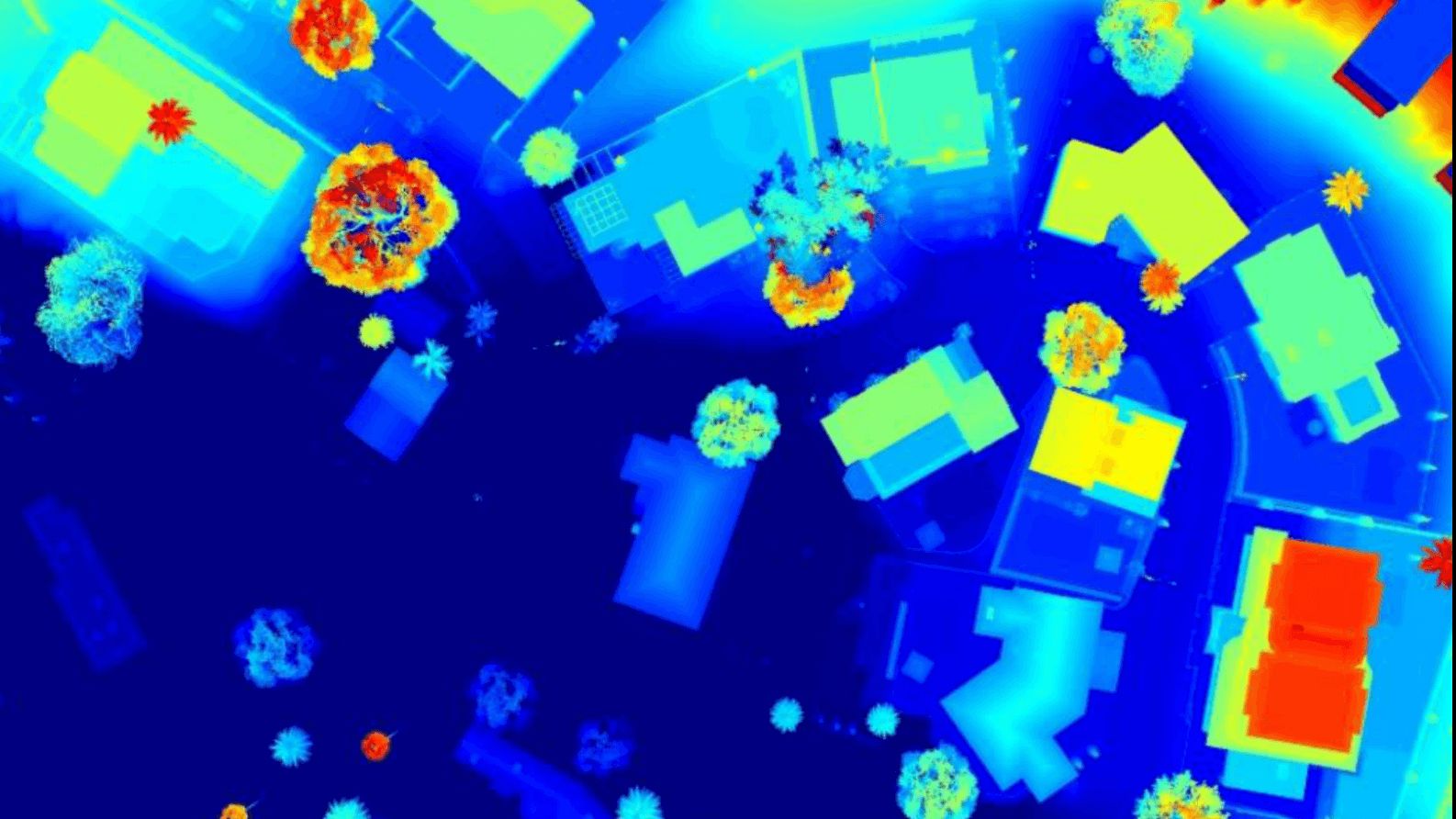

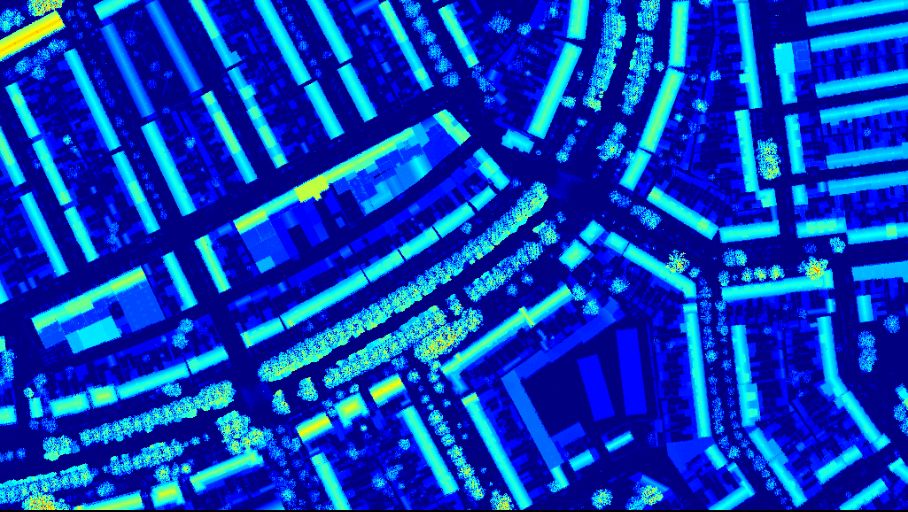

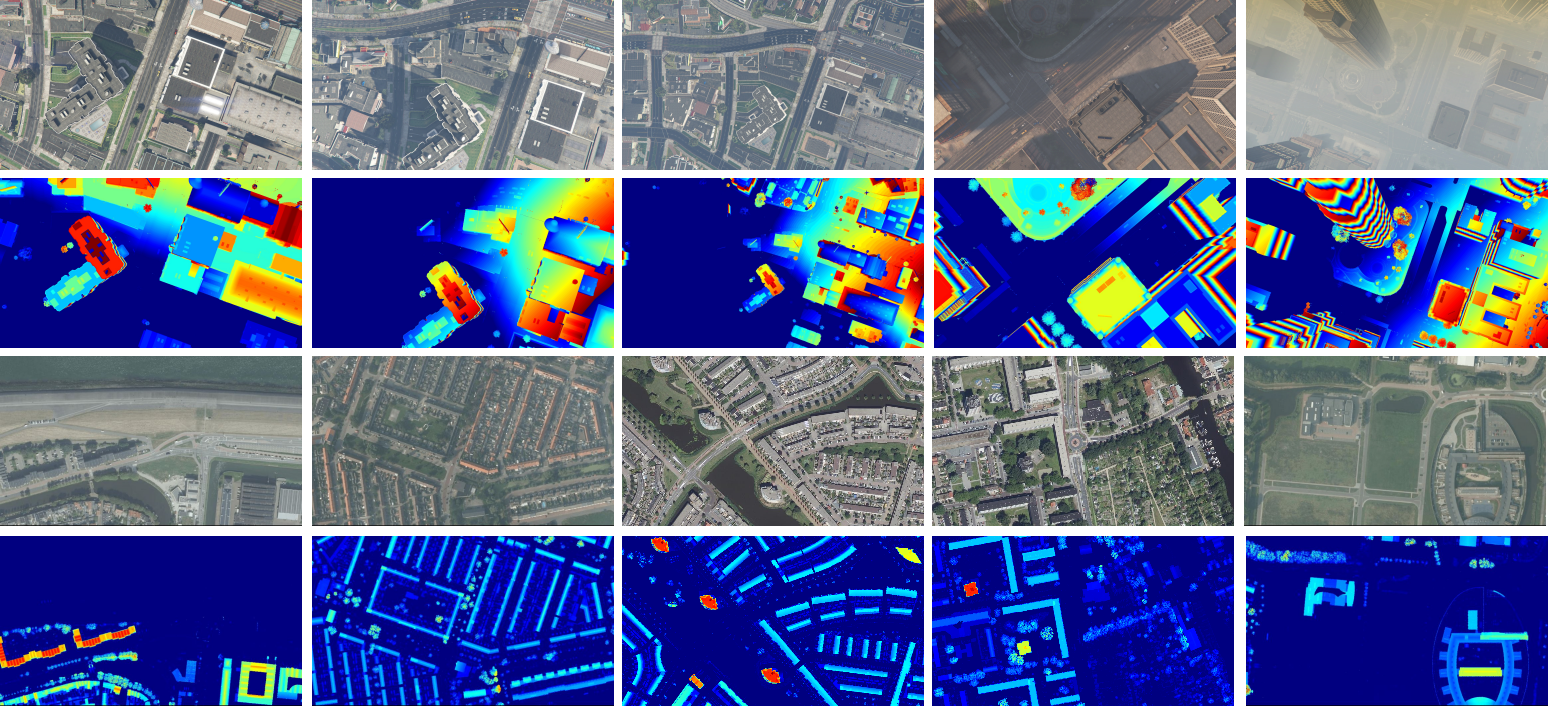

Examples

Synthetic

Aerial

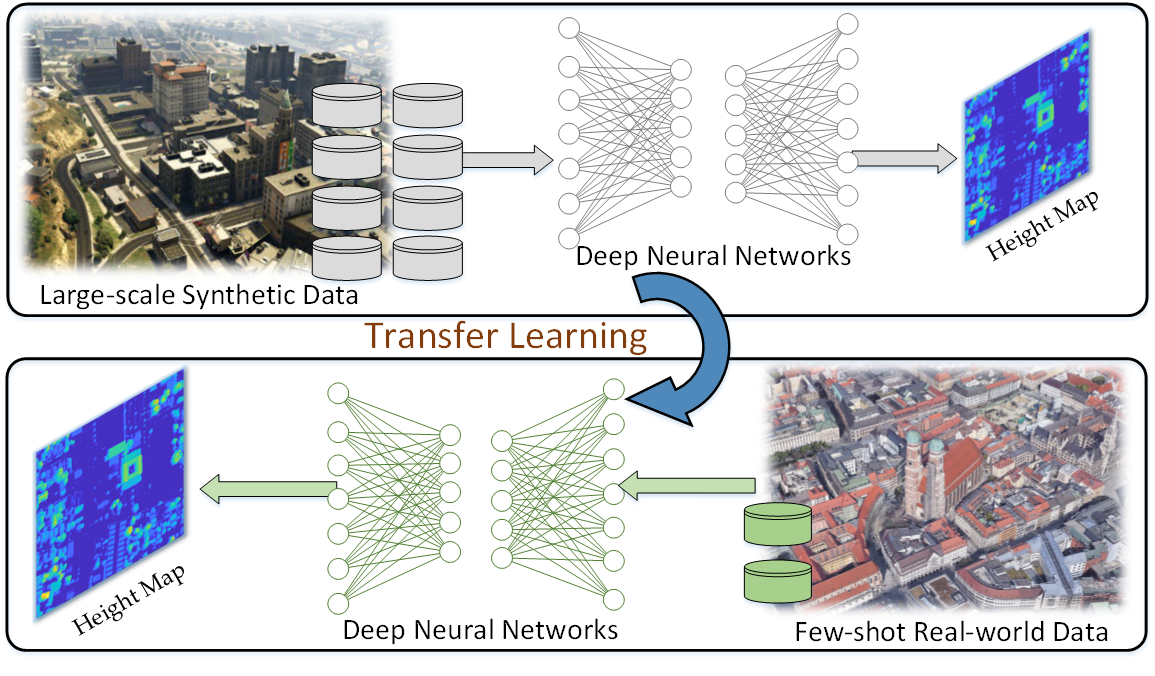

Overview

Generating 3D city models rapidly is crucial for many applications. Monocular height estimation is one of the most efficient ways to obtain large-scale geometric information in a timely manner. However, existing works mainly focus on training and testing models on unbiased datasets, which does not align well with real-world applications. Therefore, we propose a new benchmark dataset to study the transferability of height estimation models in a cross-dataset setting. To this end, we first design and construct a large-scale benchmark dataset for cross-dataset transfer learning on the height estimation task. The benchmark includes a newly proposed large-scale synthetic dataset, a newly collected real-world dataset, and four existing datasets. Based on this benchmark, we design two new experimental protocols: zero-shot and few-shot cross-dataset transfer. For few-shot cross-dataset transfer, we enhance the window-based Transformer with the proposed scale-deformable convolution module to handle the severe scale-variation problem. To improve the generalizability of deep models in the zero-shot cross-dataset setting, we design a max-normalization-based Transformer network that decouples the relative height map from the absolute heights. Experimental results demonstrate the effectiveness of the proposed methods in both the traditional and cross-dataset transfer settings.
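The idea of decoupling a relative height map from absolute heights can be illustrated with a small sketch. This is not the network described above. It only shows the decomposition that max-normalization implies, under the assumption that heights are non-negative and that each tile is normalized by its own maximum. The function names `max_normalize` and `denormalize` and the `eps` parameter are hypothetical and chosen for illustration.

```python
def max_normalize(height_map, eps=1e-6):
    """Split a height map into a relative map in [0, 1] and its maximum height.

    Assumption (not from the source): heights are non-negative and each
    tile is normalized by its own maximum value.
    """
    max_height = max(max(row) for row in height_map)
    # Guard against division by zero on completely flat tiles.
    scale = max(max_height, eps)
    relative = [[h / scale for h in row] for row in height_map]
    return relative, max_height


def denormalize(relative, max_height):
    """Recover absolute heights from a relative map and a maximum height."""
    return [[r * max_height for r in row] for row in relative]


# Toy 2x2 height map in metres (illustrative values only).
heights = [[0.0, 5.0], [10.0, 20.0]]
relative, max_height = max_normalize(heights)
restored = denormalize(relative, max_height)
```

In this form the relative map describes the shape of the scene independently of its scale, while the single scalar carries the absolute height range, which may differ strongly between datasets.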

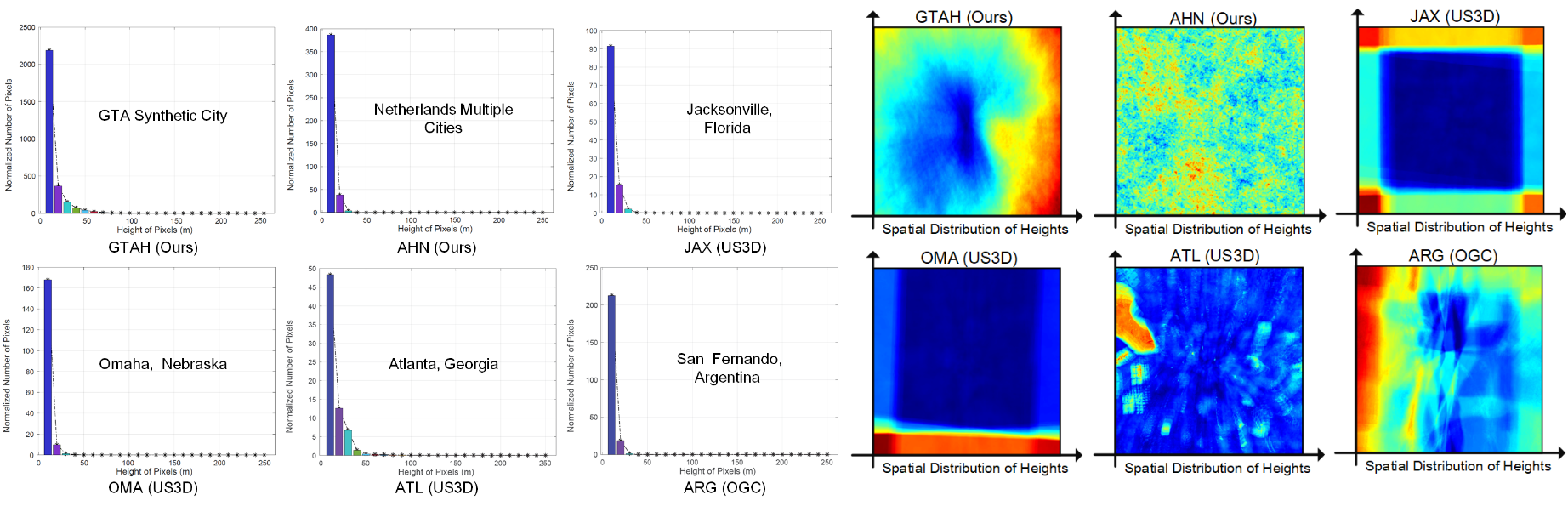

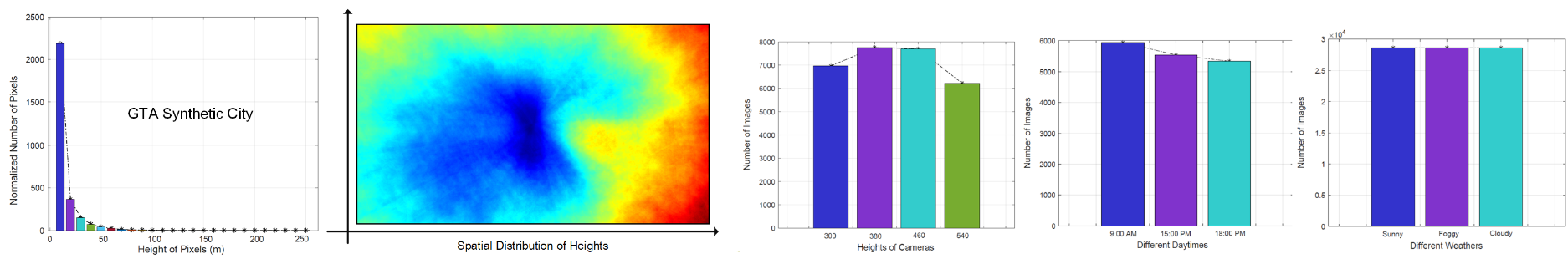

Statistical Results

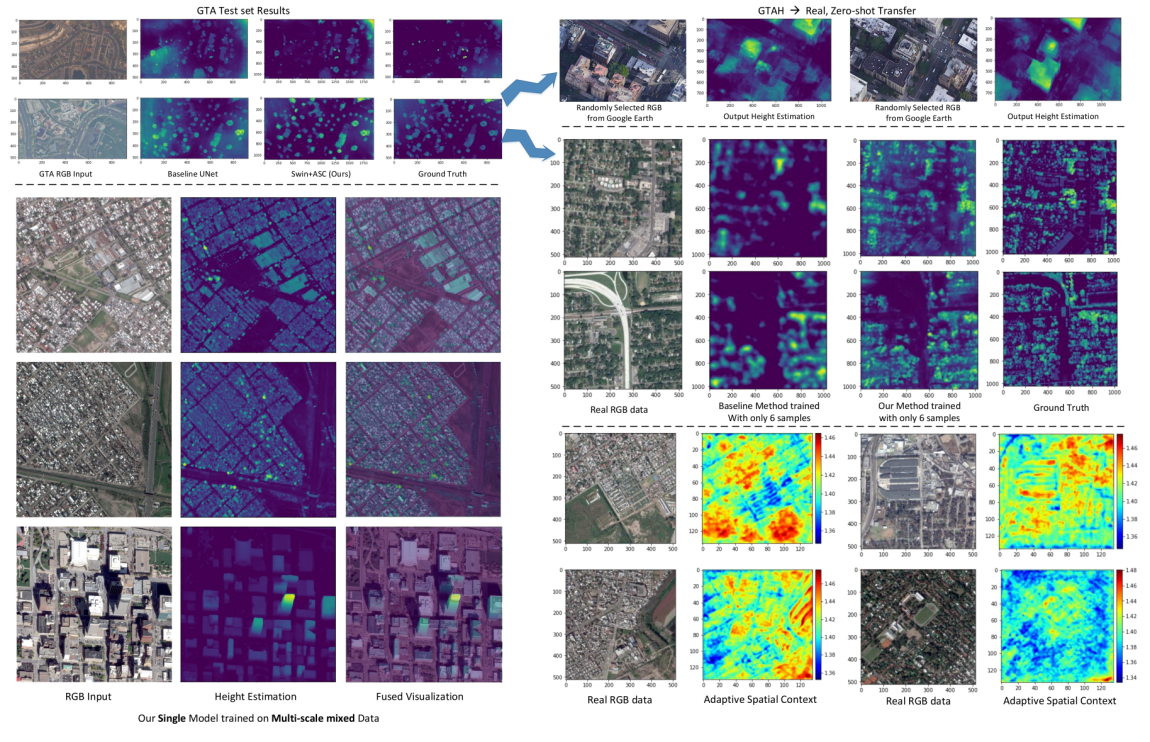

Visualization Results

Citation

If you make use of the Road dataset, please cite the following paper:

@inproceedings{, author = {}, title = {}, booktitle = {}, year = {2021} }